Easier way

Thursday, October 18, 2007

Tar and Gzip in one command

Posted by iCehaNgeR's hAcK NoteS at 7:31 AM 0 comments

Monday, October 15, 2007

How to Escape the SAX parser from wild characters

The SAX parser throws an exception if your string contains a bare & character. This character is common in URLs, so parsing URLs can be a headache. The workaround is to replace each & with &amp; (written without any spaces).
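Here is a small sketch of that workaround in Java. The class and method names are made up for illustration, and the replacement is deliberately naive: it assumes the input has not been escaped already, otherwise an existing &amp; would be escaped twice.

```java
import java.io.StringReader;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.InputSource;
import org.xml.sax.helpers.DefaultHandler;

class AmpersandEscape {
    // Replaces every '&' with the entity "&amp;". Only use this on raw,
    // not-yet-escaped text (hypothetical helper, not part of any library).
    static String escapeAmpersands(String raw) {
        return raw.replace("&", "&amp;");
    }

    // Returns true if the SAX parser accepts the document, false if it throws.
    static boolean parses(String xml) {
        try {
            SAXParserFactory.newInstance().newSAXParser()
                    .parse(new InputSource(new StringReader(xml)), new DefaultHandler());
            return true;
        } catch (Exception e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String url = "http://example.com/search?q=sax&page=2";
        // The raw URL contains a bare '&', so the parser rejects it.
        System.out.println(parses("<link>" + url + "</link>"));
        // After escaping, the same URL is accepted.
        System.out.println(parses("<link>" + escapeAmpersands(url) + "</link>"));
    }
}
```

The first call should report a failure and the second a success; the handler receives the URL with a plain & again, because the parser turns the entity back into the character.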

Posted by iCehaNgeR's hAcK NoteS at 8:11 AM 0 comments

Tuesday, October 2, 2007

Listing your drives

File[] roots = File.listRoots();
for (File root : roots) {
    System.out.println(root.getPath());
}

Posted by iCehaNgeR's hAcK NoteS at 1:28 PM 0 comments

Friday, September 28, 2007

Dual Boot XP and Ubuntu 7.04 with NTLDR

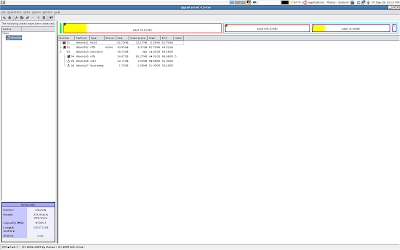

My current system partitions after the dual-boot configuration.

Tools you need, and that are handy to have in any situation:

Windows Installation CD (For safety)

Ubuntu Installation CD

GRUB rescue CD http://supergrub.forjamari.linex.org/

Linux Rescue CD http://www.sysresccd.org/Main_Page

USB drive.

My System Configuration

Dell Precision M90

Dual Processor

100 GB hard disk.

Partitioning

The current partitions are:

62.72 MB of FAT16, which came with the system; Dell stores its utilities here.

44.01 GB of NTFS; this is the active boot partition where all the Windows files go, and it is named C:\

49.15 GB of NTFS which is empty and named D:\

If you need to format or shrink the partition from Windows beforehand, you can do it from MMC by selecting disk partitioning. Just type MMC in the Run dialog.

The whole point here is to install Ubuntu alongside Windows without affecting the current MBR configuration. We won't disturb the Windows MBR at any point.

Now boot the system with the Linux rescue CD.

Press Enter at the end of the prompts and type startx.

This takes you to the graphical screen. Select the disk partition utility and re-partition your D:\ drive to make room for two Linux partitions.

We will shrink D:\ and end up with:

24.67 GB of NTFS

22.7GB with ext3

1.76 GB with linux-swap

Save the changes, quit, and leave the OS with halt or shutdown.

That's it for partitioning for now. :-)

Now boot the system with the Ubuntu install CD.

Go through all the steps until you reach partitioning.

Select the Manual option.

Pick the partitions we created earlier, the ext3 and the linux-swap.

Use the ext3 partition as your root partition. Ubuntu will now complain that it is not formatted; check the format option there so that Ubuntu formats the root partition again.

At the end of partitioning, select the Advanced option. This is the most confusing part of Ubuntu 7.04; it was not there in 6.10 or earlier versions. When you click Advanced it shows a value, (hd0). This is the place where GRUB is going to be installed. If you don't change this parameter you are in deep trouble: GRUB will be installed in the MBR and wipe out the Windows MBR. No more Windows until you fix the MBR from the Recovery Console on the Windows CD (the command is fixmbr), which wipes out the GRUB additions.

Back to the GRUB install: in the Advanced option you have to tell Ubuntu to install GRUB on your Linux partition, which is /dev/sda6 in my case. In previous versions we could enter it just like that; in 7.04 we have to enter it this way:

(hd0,5)

That means GRUB is installed on your first hard disk, hd0,

and on partition number 5. GRUB counts partitions from 0, so number 5 is the sixth partition, /dev/sda6.

Now complete the installation.

Before you reboot, go to the console and type this:

dd if=/dev/sda6 of=/media/device/linux.bin bs=512 count=1

This copies the first 512 bytes (the boot sector) of the Linux partition into a linux.bin file on your USB drive; /media/device is my USB drive's mount path.
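If you want to sanity-check the file before relying on it, here is a rough sketch in Java. It assumes linux.bin is a copy of a single 512-byte boot sector and only checks the size and the standard 0x55 0xAA signature in the last two bytes; it does not prove that GRUB itself is intact. The class name and the default file name are just for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

class BootSectorCheck {
    // A PC boot sector is 512 bytes long and ends with the signature 0x55 0xAA.
    static boolean looksLikeBootSector(byte[] data) {
        return data.length == 512
                && (data[510] & 0xFF) == 0x55
                && (data[511] & 0xFF) == 0xAA;
    }

    public static void main(String[] args) throws IOException {
        // Pass the path to linux.bin as the first argument (assumed location otherwise).
        Path image = Paths.get(args.length > 0 ? args[0] : "linux.bin");
        System.out.println(looksLikeBootSector(Files.readAllBytes(image)));
    }
}
```

A result of false usually means the copy was the wrong size or came from the wrong partition.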

If you are lucky and didn't do anything wrong, you should be able to get into Windows after the reboot. If you cannot get into Windows but can get into Ubuntu, that means one of two things:

You have wiped out your MBR.

OR

Your boot partition is not active; your Linux partition is active instead.

If it is an MBR problem, use the Windows rescue CD to fix it.

If the boot partition is not active, use the Linux rescue CD to mark the Windows partition as the active boot partition.

Now get into Windows, copy linux.bin from the USB drive to C:\, and edit boot.ini on the C:\ drive. You have to make hidden and system files visible first; you can do that from Folder Options.

Now edit boot.ini and add

C:\linux.bin="Linux"

as your last line, then save. This is how we tell NTLDR to boot Linux through its GRUB.

At this point you have another option: if you didn't have a USB drive, or you forgot to issue the dd command, there is a tool called bootpart.

It creates the linux.bin file and adds it to boot.ini, with no manual editing of the file. Cool. The bad part is that it adds a URL and some text that show up when you boot. Free advertisement. Blaaaaaaa.

Now reboot the system and you should see two options, XP and Linux. Select Linux; if it loads and boots, you have succeeded.

If not, you have one more thing to do. Your GRUB installation is corrupted and you have to re-install GRUB. This is where Super GRUB comes in.

Boot again with Super GRUB.

Type C.

You are now at the GRUB prompt. Issue this:

find /boot/grub/stage1

This tells you where your GRUB is installed.

It will print (hd0,5).

Now try to boot your GRUB with the boot option from Super GRUB, giving it the place where your GRUB is installed; we found it just now with the find command.

If it errors out with something like "no executable" or "end of line corrupted", you have to re-install GRUB. Not a big deal.

Type:

root (hd0,5)

setup (hd0,5)

quit

Make sure you have a space between root and (, otherwise it will report that the command was not found.

Now your GRUB is re-installed. Boot GRUB again with the partition loader. This time it should work.


Posted by iCehaNgeR's hAcK NoteS at 8:17 AM 1 comments

Wednesday, September 26, 2007

For Each Loop

Iterating over a collection is uglier than it needs to be. Consider the following method, which takes a collection of timer tasks and cancels them:

void cancelAll(Collection<TimerTask> c) {
    for (Iterator<TimerTask> i = c.iterator(); i.hasNext(); )
        i.next().cancel();
}

The iterator is just clutter. Furthermore, it is an opportunity for error. The iterator variable occurs three times in each loop: that is two chances to get it wrong. The for-each construct gets rid of the clutter and the opportunity for error. Here is how the example looks with the for-each construct:

void cancelAll(Collection<TimerTask> c) {
    for (TimerTask t : c)
        t.cancel();
}

When you see the colon (:) read it as “in.” The loop above reads as “for each TimerTask t in c.” As you can see, the for-each construct combines beautifully with generics. It preserves all of the type safety, while removing the remaining clutter. Because you don't have to declare the iterator, you don't have to provide a generic declaration for it. (The compiler does this for you behind your back, but you need not concern yourself with it.)
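To try the construct out, here is a small self-contained sketch. It repeats the cancelAll method from the text and adds a second, made-up method to show that the same loop also works on plain arrays; the class name and the sum method are illustrative only.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.List;
import java.util.TimerTask;

class ForEachDemo {
    // Same method as in the text: no iterator variable to get wrong.
    static void cancelAll(Collection<TimerTask> c) {
        for (TimerTask t : c) {
            t.cancel();
        }
    }

    // The for-each construct also works on arrays; here it sums the elements.
    static int sum(int[] values) {
        int total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        List<TimerTask> tasks = new ArrayList<TimerTask>();
        tasks.add(new TimerTask() {
            @Override
            public void run() { }
        });
        cancelAll(tasks); // harmless even though the task was never scheduled
        System.out.println(sum(new int[] {1, 2, 3})); // prints 6
    }
}
```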

Posted by iCehaNgeR's hAcK NoteS at 8:46 AM 0 comments

Monday, September 24, 2007

Thursday, August 23, 2007

Collection

Interfaces and their implementations:

| Interfaces | Hash table | Resizable array | Tree | Linked list | Hash table + Linked list |
|---|---|---|---|---|---|
| Set | HashSet | | TreeSet | | LinkedHashSet |
| List | | ArrayList | | LinkedList | |
| Queue | | | | | |
| Map | HashMap | | TreeMap | | LinkedHashMap |
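One practical difference between the columns is iteration order. The sketch below (class and method names invented for this note) fills a TreeSet and a LinkedHashSet with the same items: the tree keeps them sorted, while the hash table plus linked list keeps them in insertion order. HashSet is left out because its order is unspecified.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

class SetOrderDemo {
    // Adds the items to the given set and returns them in the set's iteration order.
    static List<String> inOrderOf(Set<String> set, List<String> items) {
        set.addAll(items);
        return new ArrayList<String>(set);
    }

    public static void main(String[] args) {
        List<String> items = Arrays.asList("pear", "apple", "fig", "apple");
        // Tree: sorted order, duplicate dropped.
        System.out.println(inOrderOf(new TreeSet<String>(), items));       // [apple, fig, pear]
        // Hash table + linked list: insertion order, duplicate dropped.
        System.out.println(inOrderOf(new LinkedHashSet<String>(), items)); // [pear, apple, fig]
    }
}
```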

Posted by iCehaNgeR's hAcK NoteS at 4:27 PM 0 comments

SAX V/S DOM

Instead of reading a document one piece at a time (as with SAX), a DOM parser reads an entire document. It then makes the tree for the entire document available to program code for reading and updating. Simply put, the difference between SAX and DOM is the difference between sequential, read-only access, and random, read-write access.
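A quick way to see the difference is to do the same job with both APIs. This sketch uses only the JDK's built-in parsers; the class name, method names, and the "checked" attribute are made up for the example. The SAX version just counts elements as they stream past, while the DOM version holds the whole tree and can also change it.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.SAXParserFactory;
import org.w3c.dom.Document;
import org.xml.sax.Attributes;
import org.xml.sax.helpers.DefaultHandler;

class SaxVsDom {
    static ByteArrayInputStream stream(String xml) {
        return new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8));
    }

    // SAX: elements are reported one at a time, in document order; nothing is kept.
    static int countWithSax(String xml, final String tag) throws Exception {
        final int[] count = {0};
        SAXParserFactory.newInstance().newSAXParser().parse(stream(xml), new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes atts) {
                if (qName.equals(tag)) {
                    count[0]++;
                }
            }
        });
        return count[0];
    }

    // DOM: the whole document is loaded as a tree that can be queried and modified.
    static int countWithDom(String xml, String tag) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(stream(xml));
        doc.getDocumentElement().setAttribute("checked", "true"); // read-write access
        return doc.getElementsByTagName(tag).getLength();
    }

    public static void main(String[] args) throws Exception {
        String xml = "<list><item>a</item><item>b</item></list>";
        System.out.println(countWithSax(xml, "item")); // prints 2
        System.out.println(countWithDom(xml, "item")); // prints 2
    }
}
```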

When to use DOM

If your XML documents contain document data (e.g., Framemaker documents stored in XML format), then DOM is a completely natural fit for your solution. If you are creating some sort of document information management system, then you will probably have to deal with a lot of document data. An example of this is the Datachannel RIO product, which can index and organize information that comes from all kinds of document sources (like Word and Excel files). In this case, DOM is well suited to allow programs access to information stored in these documents.

However, if you are dealing mostly with structured data (the equivalent of serialized Java objects in XML) DOM is not the best choice. That is when SAX might be a better fit.

If the information stored in your XML documents is machine readable (and generated) data then SAX is the right API for giving your programs access to this information. Machine readable and generated data include things like:

Posted by iCehaNgeR's hAcK NoteS at 4:21 PM 0 comments

Tuesday, July 24, 2007

Find and Remove files recursively Linux

find ./ -name 'subsub*' -ok rm -r {} \;

find ./ -name '*.class' -ok rm -r {} \;

Will search for any matching file or (sub)dir and delete it after asking politely… The pattern is quoted so that the shell hands it to find unexpanded.

find ./ -name 'sub*' -exec rm -rf {} \;

Will search for any file or (sub)dir, delete it and won’t tell anyone at all…
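For comparison, the same find-then-delete idea can be sketched in Java with the NIO file API. This is only a rough equivalent, with invented class and method names: it matches regular files by name (not directories, unlike rm -r), and the delete step is kept separate so the matches can be reviewed first.

```java
import java.io.IOException;
import java.nio.file.FileSystems;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class FindAndDelete {
    // Lists regular files under root whose file name matches the glob, like find -name.
    static List<Path> find(Path root, String glob) throws IOException {
        PathMatcher matcher = FileSystems.getDefault().getPathMatcher("glob:" + glob);
        try (Stream<Path> paths = Files.walk(root)) {
            return paths.filter(Files::isRegularFile)
                        .filter(p -> matcher.matches(p.getFileName()))
                        .collect(Collectors.toList());
        }
    }

    // Deletes each match without asking, roughly like -exec rm {} \;
    static int deleteAll(List<Path> matches) throws IOException {
        for (Path p : matches) {
            Files.delete(p);
        }
        return matches.size();
    }

    public static void main(String[] args) throws IOException {
        List<Path> matches = find(Paths.get("."), "*.class");
        matches.forEach(System.out::println); // review the list before deleting
        // deleteAll(matches);                // uncomment to actually delete
    }
}
```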

The trick is that the {} in the rm command is replaced by whatever the find command finds… (obviously, you don't have to delete..

Posted by iCehaNgeR's hAcK NoteS at 9:11 AM 0 comments

LDAP SCHEMA DESIGN

COLLEGE LDAP Schema - CASE STUDY

Description

This document describes the design of an LDAP schema for a college administration department. A college has various departments, such as Mechanical, Chemical, Electronics and Civil. Each department has various branches. For example, the Electronics department can have Electronics & Communication Engineering, Electronics & Electrical Engineering, Electronics & Instrumentation Engineering, Computer Science Engineering, Computer Science & Information Technology, Electronics & Computer Science, etc. Each branch has a person in charge, a number of students, and teaching and non-teaching staff. Each student attends a set of subjects for an academic year. Each teaching and non-teaching staff member has salary details. Framing these requirements as a hierarchy gives the LDAP tree structure developed below.

Note: the OIDs used in this article were chosen at random and are for temporary use only. Please don't use the OIDs mentioned in this article; use your own. The OIDs in this article will be changed in the next release.

Schema design

From the above description, the objectclasses identified are COLLEGE, DEPARTMENT, BRANCH, ACADEMIC, STUDENT, SUBJECTS, STAFF and SALARY and each object class consists of following attributes.

| COLLEGE | DEPARTMENT | BRANCH | ACADEMIC | STUDENT |
|---|---|---|---|---|
| collegeName | deptName | branchName | academicYear | studentName |
| collegePrincipal | deptHead | branchHead | | studentNumber |
| collegePresident | | branchNumberOfStudents | | studentAddress |
| collegeSecretary | | | | studentAcademicYear |
| collegeBoardMember | | | | studentCourse |
| | | | | studentStatus |
| | | | | studentYear |

| SUBJECTS | STAFF | SALARY |
|---|---|---|
| subjectName | staffName | salaryBASIC |
| subjectMarks | staffID | salaryDA |
| subjectCode | staffAddress | salaryHRA |
| | staffQualification | salaryAllowance |
| | staffDesignation | salaryTotal |
| | staffBranch | |
| | staffType | |

The top node is the college, with the unique DN o=college. The college has different departments such as Civil, Electronics and Mechanical; the number of child nodes depends on the number of departments in the college. Suppose the college has two departments, Civil and Electronics. Then the parent node o=college has two child nodes, deptName=Civil and deptName=Electronics, identified by the DNs deptName=Civil,o=college and deptName=Electronics,o=college. A search with the DN deptName=Electronics,o=college retrieves the attributes of that entry. The subtree is:

o=college
|-- deptName=Civil
`-- deptName=Electronics

Suppose the Electronics department has three branches: ECE, EEE and CSE. The DNs of the branches are branchName=ECE,deptName=Electronics,o=college, branchName=EEE,deptName=Electronics,o=college and branchName=CSE,deptName=Electronics,o=college.
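The way a DN grows from right to left can be tried out with the JDK's javax.naming.ldap.LdapName class, which needs no server. The sketch below uses invented helper names; it builds a branch DN by adding one RDN at a time to the parent, and recovers the parent DN by dropping the leftmost RDN.

```java
import javax.naming.InvalidNameException;
import javax.naming.ldap.LdapName;

class DnDemo {
    // Builds a child DN by adding each RDN, in order, in front of the parent DN.
    static String childDn(String parentDn, String... rdns) throws InvalidNameException {
        LdapName name = new LdapName(parentDn);
        for (String rdn : rdns) {
            name.add(rdn); // becomes the new leftmost component
        }
        return name.toString();
    }

    // Returns the DN of the parent entry by dropping the leftmost RDN.
    static String parentDn(String dn) throws InvalidNameException {
        LdapName name = new LdapName(dn);
        return name.getPrefix(name.size() - 1).toString();
    }

    public static void main(String[] args) throws InvalidNameException {
        String branch = childDn("o=college", "deptName=Electronics", "branchName=ECE");
        System.out.println(branch);           // branchName=ECE,deptName=Electronics,o=college
        System.out.println(parentDn(branch)); // deptName=Electronics,o=college
    }
}
```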

Now take the branch ECE. Each branch has students and staff. Each student belongs to a particular academic year, and each staff member belongs to either the teaching or the non-teaching staff. So the subtree under the DN branchName=ECE,deptName=Electronics,o=college is:

branchName=ECE
|-- ou=student
|   |-- academicYear=1999-2000
|   `-- academicYear=2000-2001
`-- ou=staff
    |-- ou=teaching
    `-- ou=nonteaching

Each academicYear consists of students, and each student studies a set of subjects; each staff member has salary details and the subjects he or she handles. The subtrees for academicYear, teaching and nonteaching are:

academicYear=1999-2000
|-- studentNumber=100
`-- studentNumber=101
    |-- subjectCode=001
    `-- subjectCode=002

ou=teaching
|-- staffID=200
`-- staffID=201
    |-- ou=Salary Details
    `-- subjectCode=001

ou=nonteaching
|-- staffID=300
`-- staffID=301
    `-- ou=Salary Details

COLLEGE LDAP Tree

o=college
|-- deptName=Civil
`-- deptName=Electronics
    |-- branchName=EEE
    |-- branchName=ECE
    |   |-- ou=student
    |   |   |-- academicYear=1999-2000
    |   |   |   |-- studentNumber=100
    |   |   |   `-- studentNumber=101
    |   |   |       |-- subjectCode=001
    |   |   |       `-- subjectCode=002
    |   |   `-- academicYear=2000-2001
    |   `-- ou=staff
    |       |-- ou=teaching
    |       |   |-- staffID=200
    |       |   `-- staffID=201
    |       |       |-- ou=Salary Details
    |       |       `-- subjectCode=001
    |       `-- ou=nonteaching
    |           |-- staffID=300
    |           `-- staffID=301
    |               `-- ou=Salary Details
    `-- branchName=CSE

Attributes

This section contains attribute file for College LDAP tree

attributetype ( 1.3.6.1.4.1.15490.1.1 NAME 'collegeName' DESC 'college name' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.2 NAME 'collegePrincipal' DESC 'college principal name' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.3 NAME 'collegePresident' DESC 'college president' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.4 NAME 'collegeSecretary' DESC 'college secretary name' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.5 NAME 'collegeBoardMember' DESC 'board member name' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 ) attributetype ( 1.3.6.1.4.1.15490.1.6 NAME 'deptName' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.7 NAME 'deptHead' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.8 NAME 'branchName' DESC 'board member name' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE) attributetype ( 1.3.6.1.4.1.15490.1.9 NAME 'branchHead' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.10 NAME 'branchNumberOfStudents' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.11 NAME 'studentName' DESC 'description' EQUALITY 
caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.12 NAME 'studentNumber' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15) attributetype ( 1.3.6.1.4.1.15490.1.13 NAME 'studentAddress' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.14 NAME 'studentAcademicYear' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.15 NAME 'studentCourse' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE) attributetype ( 1.3.6.1.4.1.15490.1.16 NAME 'studentStatus' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.17 NAME 'studentYear' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.18 NAME 'subjectName' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.19 NAME 'subjectMarks' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.20 NAME 'subjectCode' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.21 NAME 'staffName' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 
1.3.6.1.4.1.15490.1.22 NAME 'staffID' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.23 NAME 'staffAddress' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.24 NAME 'staffQualification' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.25 NAME 'staffDesignation' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.26 NAME 'staffDepartment' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.27 NAME 'staffBranch' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.28 NAME 'staffType' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.29 NAME 'salaryBasic' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.30 NAME 'salaryDA' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.31 NAME 'salaryHRA' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.32 NAME 'salaryAllowance' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch 
SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.33 NAME 'salaryTotal' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE ) attributetype ( 1.3.6.1.4.1.15490.1.34 NAME 'academicYear' DESC 'description' EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15 SINGLE-VALUE )

Object class

This section gives the objectclass details for the College LDAP tree.

objectclass ( 1.3.6.1.4.1.15490.2.1 NAME 'objCollege' SUP top STRUCTURAL DESC 'object class'
    MUST ( collegeName $ collegePrincipal $ collegePresident $ collegeSecretary )
    MAY ( collegeBoardMember ) )
objectclass ( 1.3.6.1.4.1.15490.2.2 NAME 'objDepartment' SUP top STRUCTURAL DESC 'object class'
    MUST ( deptName $ deptHead ) )
objectclass ( 1.3.6.1.4.1.15490.2.3 NAME 'objBranch' SUP top STRUCTURAL DESC 'object class'
    MUST ( branchHead $ branchName $ branchNumberOfStudents ) )
objectclass ( 1.3.6.1.4.1.15490.2.4 NAME 'objStudent' DESC 'object class' SUP top STRUCTURAL
    MUST ( studentName $ studentNumber $ studentAddress $ studentAcademicYear $ studentCourse $ studentStatus $ studentYear ) )
objectclass ( 1.3.6.1.4.1.15490.2.5 NAME 'objSubject' DESC 'object class' SUP top STRUCTURAL
    MUST ( subjectCode $ subjectName $ subjectMarks ) )
objectclass ( 1.3.6.1.4.1.15490.2.6 NAME 'objStaff' DESC 'object class' SUP top STRUCTURAL
    MUST ( staffName $ staffID $ staffAddress $ staffQualification $ staffDepartment $ staffBranch $ staffType ) )
objectclass ( 1.3.6.1.4.1.15490.2.7 NAME 'objSalary' SUP top STRUCTURAL DESC 'object class'
    MUST ( salaryBASIC $ salaryDA $ salaryHRA $ salaryAllowance $ salaryTotal ) )
objectclass ( 1.3.6.1.4.1.15490.2.8 NAME 'objAcademic' DESC 'object class' SUP top STRUCTURAL
    MUST ( academicYear ) )

Sample LDIF File

This section gives a sample LDIF file for creating the LDAP tree:

dn: o=college
o: college
objectclass: organization
objectclass: objCollege
objectclass: top
Description: top object class
collegeName: ABC Engineering college
collegePrincipal: John Peter
collegePresident: Samual A
collegeSecretary: Benson K
collegeBoardMember: Jennifer King
collegeBoardMember: George K Danial

dn: deptName=Civil,o=college
deptName: Civil
objectclass: objDepartment
objectclass: top
deptHead: abc

dn: deptName=Electronics,o=college
deptName: Electronics
objectclass: objDepartment
objectclass: top
deptHead: defg

dn: branchName=ECE,deptName=Electronics,o=college
branchName: ECE
objectclass: top
objectclass: objBranch
branchHead: kkk
branchNumberOfStudents: 50

dn: ou=student,branchName=ECE,deptName=Electronics,o=college
ou: student
objectclass: top
objectclass: organizationalUnit

dn: ou=staff,branchName=ECE,deptName=Electronics,o=college
ou: staff
objectclass: top
objectclass: organizationalUnit

dn: academicYear=1999-2000,ou=student,branchName=ECE,deptName=Electronics,o=college
academicYear: 1999-2000
objectclass: top
objectclass: objAcademic

dn: academicYear=2000-2001,ou=student,branchName=ECE,deptName=Electronics,o=college
academicYear: 2000-2001
objectclass: top
objectclass: objAcademic

dn: studentNumber=100,academicYear=1999-2000,ou=student,branchName=ECE,deptName=Electronics,o=college
studentNumber: 100
objectclass: top
objectclass: objStudent
studentName: abc
studentAddress: abc
studentAcademicYear: 1999-2000
studentCourse: BS
studentStatus: Active
studentYear: 1999

dn: studentNumber=101,academicYear=1999-2000,ou=student,branchName=ECE,deptName=Electronics,o=college
studentNumber: 101
objectclass: top
objectclass: objStudent
studentName: def
studentAddress: def
studentAcademicYear: 1999-2000
studentCourse: BS
studentStatus: Active
studentYear: 1999

dn: subjectCode=001,studentNumber=101,academicYear=1999-2000,ou=student,branchName=ECE,deptName=Electronics,o=college
subjectCode: 001
objectclass: top
objectclass: objSubject
subjectName: Mathematics-1
subjectMarks: 90

dn: subjectCode=002,studentNumber=101,academicYear=1999-2000,ou=student,branchName=ECE,deptName=Electronics,o=college
subjectCode: 002
objectclass: top
objectclass: objSubject
subjectName: Electrical Technology
subjectMarks: 85

dn: ou=teaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
ou: teaching
objectclass: top
objectclass: organizationalUnit

dn: ou=nonteaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
ou: nonteaching
objectclass: top
objectclass: organizationalUnit

dn: staffID=001,ou=teaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
staffID: 001
objectclass: top
objectclass: objStaff
staffName: abc
staffAddress: sdflasjflasd
staffQualification: MS, Phd
staffDepartment: Electronics
staffBranch: ECE
staffType: teaching

dn: staffID=002,ou=teaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
staffID: 002
objectclass: top
objectclass: objStaff
staffName: def
staffAddress: saas
staffQualification: MS, Phd
staffDepartment: Electronics
staffBranch: ECE
staffType: teaching

dn: staffID=101,ou=nonteaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
staffID: 101
objectclass: top
objectclass: objStaff
staffName: abc
staffAddress: sdflasjflasd
staffQualification: BS
staffDepartment: Electronics
staffBranch: ECE
staffType: nonteaching

dn: staffID=102,ou=nonteaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
staffID: 102
objectclass: top
objectclass: objStaff
staffName: def
staffAddress: saas
staffQualification: B.Com
staffDepartment: Electronics
staffBranch: ECE
staffType: nonteaching

dn: ou=Salary Details,staffID=002,ou=teaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
ou: Salary Details
objectclass: top
objectclass: objSalary
objectclass: organizationalUnit
salaryBasic: 10000
salaryDA: 20000
salaryHRA: 4000
salaryAllowance: 6000
salaryTotal: 40000

dn: subjectCode=001,staffID=002,ou=teaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
subjectCode: 001
objectclass: top
objectclass: objSubject
subjectName: Electronics-1
subjectMarks: 100

dn: ou=Salary Details,staffID=102,ou=nonteaching,ou=staff,branchName=ECE,deptName=Electronics,o=college
ou: Salary Details
objectclass: top
objectclass: objSalary
objectclass: organizationalUnit
salaryBasic: 1000
salaryDA: 2000
salaryHRA: 400
salaryAllowance: 600
salaryTotal: 4000

Posted by iCehaNgeR's hAcK NoteS at 8:16 AM 0 comments

LDAP

Use ApacheDS as the LDAP server.

Designing an LDAP application.

Install the LDAP Server.

Install the LDAP client Browser.

The default Bind DN

uid=admin,ou=system

and password secret

Edit the password if needed.
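From Java, these bind details go into a JNDI environment. The sketch below is one way to set that up; the class and method names are invented, and the URL assumes ApacheDS is running on the local machine on port 10389, which is its usual default but worth checking against your own installation.

```java
import java.util.Hashtable;
import javax.naming.Context;
import javax.naming.NamingException;
import javax.naming.directory.DirContext;
import javax.naming.directory.InitialDirContext;

class ApacheDsConnection {
    // Collects the JNDI settings for a simple (DN + password) bind.
    static Hashtable<String, String> environment(String url, String bindDn, String password) {
        Hashtable<String, String> env = new Hashtable<String, String>();
        env.put(Context.INITIAL_CONTEXT_FACTORY, "com.sun.jndi.ldap.LdapCtxFactory");
        env.put(Context.PROVIDER_URL, url);
        env.put(Context.SECURITY_AUTHENTICATION, "simple");
        env.put(Context.SECURITY_PRINCIPAL, bindDn);
        env.put(Context.SECURITY_CREDENTIALS, password);
        return env;
    }

    public static void main(String[] args) throws NamingException {
        // Assumption: ApacheDS is listening on localhost:10389 with the default admin account.
        DirContext ctx = new InitialDirContext(
                environment("ldap://localhost:10389", "uid=admin,ou=system", "secret"));
        System.out.println("Connected");
        ctx.close();
    }
}
```

If the bind DN or password is wrong, the InitialDirContext constructor throws a NamingException instead of returning a context.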

Create the root suffix, or the new partition.

Objects

A directory service is an extension of a naming service. In a directory service, an object is also associated with a name. However, each object is allowed to have attributes. You can look up an object by its name; but you can also obtain the object's attributes or search for the object based on its attributes.

The object classes for all objects in the directory form a class hierarchy. The classes "top" and "alias" are at the root of the hierarchy. For example, the "organizationalPerson" object class is a subclass of the "Person" object class, which in turn is a subclass of "top". When creating a new LDAP entry, you must always specify all of the object classes to which the new entry belongs. Because many directories do not support object class subclassing, you also should always include all of the superclasses of the entry. For example, for an "organizationalPerson" object, you should list in its object classes the "organizationalPerson", "person", and "top" classes.
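In JNDI terms, that advice means putting every class in the chain into the objectClass attribute. A minimal sketch, with an invented class and method name, and with cn and sn included because the standard person class requires them:

```java
import javax.naming.directory.Attribute;
import javax.naming.directory.Attributes;
import javax.naming.directory.BasicAttribute;
import javax.naming.directory.BasicAttributes;

class PersonEntry {
    // Builds the attributes for an organizationalPerson entry, listing the
    // class itself and all of its superclasses in objectClass.
    static Attributes organizationalPerson(String cn, String sn) {
        Attribute objectClass = new BasicAttribute("objectClass");
        objectClass.add("top");
        objectClass.add("person");
        objectClass.add("organizationalPerson");

        Attributes attrs = new BasicAttributes(true); // true = ignore attribute-name case
        attrs.put(objectClass);
        attrs.put("cn", cn);
        attrs.put("sn", sn);
        return attrs;
    }

    public static void main(String[] args) {
        // These attributes could then be passed to DirContext.createSubcontext(name, attrs).
        System.out.println(organizationalPerson("Ann Martin", "Martin"));
    }
}
```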

Add the Attributes.

An attribute of a directory object is a property of the object. For example, a person can have the following attributes: last name, first name, user name, email address, telephone number, and so on. A printer can have attributes like resolution, color, and speed.

An attribute has an identifier which is a unique name in that object. Each attribute can have one or more values. For instance, a person object can have an attribute called LastName. LastName is the identifier of an attribute. An attribute value is the content of the attribute. For example, the LastName attribute can have a value like "Martin".
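To make the object class and attribute rules concrete, here is a minimal sketch using the JNDI classes that ship with the JDK. It only builds the attribute set in memory and does not talk to a server. The class name PersonEntrySketch and the sample values are my own assumptions, not something from the tutorial.

```java
import javax.naming.directory.Attribute;
import javax.naming.directory.Attributes;
import javax.naming.directory.BasicAttribute;
import javax.naming.directory.BasicAttributes;

// Hypothetical helper: builds the attributes of an organizationalPerson entry.
class PersonEntrySketch {

    static Attributes buildPerson(String commonName, String surname) {
        // true = attribute identifiers are matched case-insensitively
        Attributes attrs = new BasicAttributes(true);

        // One attribute, several values: the entry's class plus all superclasses.
        Attribute objectClass = new BasicAttribute("objectClass");
        objectClass.add("top");
        objectClass.add("person");
        objectClass.add("organizationalPerson");
        attrs.put(objectClass);

        // Single-valued attributes: identifier first, value second.
        attrs.put("cn", commonName);
        attrs.put("sn", surname);
        return attrs;
    }

    public static void main(String[] args) {
        Attributes attrs = buildPerson("Ann Martin", "Martin");
        System.out.println(attrs.get("objectclass").size() + " object classes");
        System.out.println("sn contains Martin: " + attrs.get("sn").contains("Martin"));
    }
}
```

The same Attributes object could later be handed to DirContext.createSubcontext() to create the entry on a real server.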

Define the Tree.

Write the Search and browse application.
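A search application can start from something like the sketch below. It uses plain JNDI from the JDK. The URL ldap://localhost:10389 and the base o=college are assumptions about a local ApacheDS setup, so adjust them to your own server and partition. The class and method names are made up for this note.

```java
import java.util.Hashtable;
import javax.naming.Context;
import javax.naming.NamingEnumeration;
import javax.naming.NamingException;
import javax.naming.directory.DirContext;
import javax.naming.directory.InitialDirContext;
import javax.naming.directory.SearchControls;
import javax.naming.directory.SearchResult;

class LdapSearchSketch {

    // JNDI environment for a simple (bind DN + password) login.
    static Hashtable<String, String> environment(String url, String bindDn, String password) {
        Hashtable<String, String> env = new Hashtable<>();
        env.put(Context.INITIAL_CONTEXT_FACTORY, "com.sun.jndi.ldap.LdapCtxFactory");
        env.put(Context.PROVIDER_URL, url);
        env.put(Context.SECURITY_AUTHENTICATION, "simple");
        env.put(Context.SECURITY_PRINCIPAL, bindDn);
        env.put(Context.SECURITY_CREDENTIALS, password);
        return env;
    }

    // Prints the DN of every entry below baseDn that matches the filter.
    static void printMatches(Hashtable<String, String> env, String baseDn, String filter)
            throws NamingException {
        DirContext ctx = new InitialDirContext(env);
        try {
            SearchControls controls = new SearchControls();
            controls.setSearchScope(SearchControls.SUBTREE_SCOPE);
            NamingEnumeration<SearchResult> results = ctx.search(baseDn, filter, controls);
            while (results.hasMore()) {
                System.out.println(results.next().getNameInNamespace());
            }
        } finally {
            ctx.close();
        }
    }

    public static void main(String[] args) {
        // Assumed values: host, port and base DN depend on your installation.
        Hashtable<String, String> env =
                environment("ldap://localhost:10389", "uid=admin,ou=system", "secret");
        try {
            printMatches(env, "o=college", "(objectClass=*)");
        } catch (NamingException e) {
            System.out.println("Search failed (is the server running?): " + e);
        }
    }
}
```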

Reference

http://java.sun.com/products/jndi/tutorial/ldap/schema/object.html

First Steps in LDAP

I want to have a simple addressbook with telephonenumbers and email-addresses to be reached from every mail-client I use. The addressbook shall be built and modified automatically from a database which is the main datasource.

* Structure

* Preparing LDAP-server

* Creating Organization Units

* Create people in ou=people,dc=zirndorf,dc=de

* Commands to delete and modify records

* Query the LDAP-database from your mailprogram, how to configure

Structure

Data must be structured for LDAP. Our internet-domain is zirndorf.de, so I use that. Under that there is a unit in which all the people are.

simple_structure.gif

Preparing LDAP-server

I use Debian-Linux for the LDAP-server and install these packages: slapd, ldap-utils. Take care that these files get installed in /etc/ldap/schema/:

* core.schema

* cosine.schema

* inetorgperson.schema

* nis.schema

Now I have to modify the file /etc/ldap/slapd.conf:

# Schema and objectClass definitions

include /etc/ldap/schema/core.schema

include /etc/ldap/schema/cosine.schema

include /etc/ldap/schema/nis.schema

include /etc/ldap/schema/inetorgperson.schema

...

# The base of your directory

suffix "dc=zirndorf,dc=de"

rootdn "cn=admin,dc=zirndorf,dc=de"

# this really means, that the password is "secret"

rootpw secret

# you can create a crypted password like this: slappasswd -u

# and get the crypted version on your terminal:

# rootpw {SSHA}8e8vfyo0KSWoLbyPVIPaG+MqH6h51Vst
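If you wonder what is inside such a {SSHA} value: as far as I know it is the SHA-1 digest of the password followed by a random salt, with the salt appended to the digest and the whole thing base64-encoded. The sketch below reproduces that layout in plain Java. The class name and the 4-byte salt length are my assumptions. For real use, keep generating the value with slappasswd.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.SecureRandom;
import java.util.Arrays;
import java.util.Base64;

class SshaSketch {

    // "{SSHA}" + base64( SHA-1(password + salt) + salt )
    static String hash(String password, byte[] salt) {
        byte[] digest = sha1(password.getBytes(StandardCharsets.UTF_8), salt);
        byte[] both = new byte[digest.length + salt.length];
        System.arraycopy(digest, 0, both, 0, digest.length);
        System.arraycopy(salt, 0, both, digest.length, salt.length);
        return "{SSHA}" + Base64.getEncoder().encodeToString(both);
    }

    // Recovers the salt from the stored value and recomputes the digest.
    static boolean verify(String password, String stored) {
        byte[] both = Base64.getDecoder().decode(stored.substring("{SSHA}".length()));
        byte[] digest = Arrays.copyOfRange(both, 0, 20);        // SHA-1 is 20 bytes
        byte[] salt = Arrays.copyOfRange(both, 20, both.length); // the rest is salt
        byte[] expected = sha1(password.getBytes(StandardCharsets.UTF_8), salt);
        return MessageDigest.isEqual(digest, expected);
    }

    private static byte[] sha1(byte[] password, byte[] salt) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-1");
            md.update(password);
            md.update(salt);
            return md.digest();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        byte[] salt = new byte[4]; // assumed salt length
        new SecureRandom().nextBytes(salt);
        String stored = hash("secret", salt);
        System.out.println(stored);
        System.out.println("verifies: " + verify("secret", stored));
    }
}
```

Because the salt is random, the same password gives a different string on every run, which is also why you cannot compare two {SSHA} values directly.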

The server should listen only on one specific IP-address (slapd is running under vserver-Linux), so I have to start the server like this in the start scripts:

/usr/sbin/slapd -h ldap://10.1.1.138:389/

Start LDAP (and check which address it is bound to with "netstat -ln" if you like).

Creating Organization Units

Create a file ou_people.ldif like this:

# file ou_people.ldif

dn: ou=people,dc=zirndorf,dc=de

ou: people

objectClass: top

objectClass: organizationalUnit

and import it into the database

# ldapadd -a -x \

-D "cn=admin,dc=zirndorf,dc=de" -w secret \

-h ldap.zdf \

-f /tmp/ou_people.ldif

Did the import work? You can dump your whole LDAP-server with this command to check it:

# ldapsearch -x -b 'dc=zirndorf,dc=de' 'objectclass=*' -h ldap.zdf

...

dn: ou=people,dc=zirndorf,dc=de

ou: people

objectClass: top

objectClass: organizationalUnit

...

If you have more units like this, create more entries in ou_people.ldif; just copy the lines I gave you.

Create people in ou=people,dc=zirndorf,dc=de

First create a very simple file to import into LDAP. I did this with Perl from a centralized database where I have all the information collected:

# the unique name in the directory

dn: cn=Roland Wende, ou=people, dc=zirndorf, dc=de

ou: people

# which schemas to use

objectClass: top

objectClass: person

objectClass: organizationalPerson

objectClass: inetOrgPerson

# the data itself, name-data

cn: Roland Wende

gn: Roland

sn: Wende

# other data (could be more, but doesn't have to be more)

mail: roland.wende@zirndorf.de

telephoneNumber: 9600-190
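The original generator was a Perl script that is not shown here. As a stand-in, this is a minimal Java sketch of the same idea: turn one database record into an LDIF entry. The class name, method name and parameters are hypothetical. It does no escaping of special characters and no base64 encoding of non-ASCII values, so treat it as a starting point only.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class LdifWriterSketch {

    // Renders one inetOrgPerson entry; 'extra' holds optional attributes such as mail.
    static String personEntry(String baseDn, String givenName, String surname,
                              Map<String, String> extra) {
        String cn = givenName + " " + surname;
        StringBuilder ldif = new StringBuilder();
        ldif.append("dn: cn=").append(cn).append(",").append(baseDn).append("\n");
        for (String oc : new String[] {"top", "person", "organizationalPerson", "inetOrgPerson"}) {
            ldif.append("objectClass: ").append(oc).append("\n");
        }
        ldif.append("cn: ").append(cn).append("\n");
        ldif.append("givenName: ").append(givenName).append("\n");
        ldif.append("sn: ").append(surname).append("\n");
        for (Map.Entry<String, String> e : extra.entrySet()) {
            ldif.append(e.getKey()).append(": ").append(e.getValue()).append("\n");
        }
        ldif.append("\n"); // a blank line separates entries in an LDIF file
        return ldif.toString();
    }

    public static void main(String[] args) {
        Map<String, String> extra = new LinkedHashMap<>();
        extra.put("mail", "roland.wende@zirndorf.de");
        extra.put("telephoneNumber", "9600-190");
        System.out.print(personEntry("ou=people,dc=zirndorf,dc=de", "Roland", "Wende", extra));
    }
}
```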

Now let's create a person with more attributes:

# the unique name in the directory

dn: cn=Richard Lippmann,ou=people,dc=zirndorf,dc=de

# which schema to use

objectClass: top

objectClass: person

objectClass: organizationalPerson

objectClass: inetOrgPerson

cn: Richard Lippmann

givenName: Richard

sn: Lippmann

# internet section

mail: lippmann@zirndorf.de

mail: horshack@lisa.franken.de

# address section, private

postalAddress: My Way 5

postalCode: 90522

l: Oberasbach

# phone section

homePhone: 0911 /123 456 789

mobile: 0179 / 123 123 123

Commands to delete and modify records

To delete a record you have to know its dn (unique record-identifier):

# ldapdelete -x \

-D "cn=admin,dc=zirndorf,dc=de" -w secret \

-h ldap.zdf \

'cn=Roland Wende,ou=People,dc=zirndorf,dc=de'

Recursively delete: ldapdelete -r ...

If you want to modify a record you have to modify it completely. ALL the attributes must be in your ldif-file!

# ldapmodify -x \

-D "cn=admin,dc=zirndorf,dc=de" -w secret \

-h ldap.zdf \

-f /tmp/ou_people.ldif

Query the LDAP-database from your mailprogram, how to configure

You have to know:

* your LDAP-server's hostname (ldap.zdf)

* your base DN (dc=zirndorf,dc=de)

* Port (389 for cleartext)

Posted by iCehaNgeR's hAcK NoteS at 8:03 AM 1 comments

Monday, July 23, 2007

vi: set line numbers and copy a range of lines

:set number shows line numbers. To copy lines from an address range to a destination use co, e.g. :1,10co50 copies lines 1 to 10 to below line 50.

Posted by iCehaNgeR's hAcK NoteS at 1:40 PM 3 comments

Monitor a log file in linux

tail -f /var/log/somelogfile.log shows the last lines of the log file and keeps printing new entries as they are appended.

Posted by iCehaNgeR's hAcK NoteS at 1:25 PM 0 comments

Thursday, July 19, 2007

Linux Networking

The network does not connect over the wired interface (e.g. eth0). When you switch between networks, for example from home to office, the DNS settings sometimes remain unchanged, and this stops the network from connecting. Change the DNS settings by running network-admin: go to the DNS settings and, under the search domains, add the DNS domain of the ISP (parent network). Then issue /etc/init.d/networking restart and ping google.com. If no packets are lost, you are good to go. There is another way: you can prepend the new domain name in the /etc/dhcp3/dhclient.conf file. Open the file in vi, search with /prepend, and add the new DHCP domain name there, for example "mydomain.com,";. This makes the new domain available at each network startup.

Posted by iCehaNgeR's hAcK NoteS at 7:38 AM 0 comments

Monday, June 18, 2007

JSR 168 Portlet life cycle

Portlet life cycle

The basic portlet life cycle of a JSR 168 portlet is: init, request handling (processAction and render), and destroy. The portlet container manages the portlet life cycle and calls the corresponding methods on the portlet interface.

Portlet interface

Every portlet must implement the portlet interface, or extend a class that implements the portlet interface. The portlet interface consists of the following methods:

init(PortletConfig config): to initialize the portlet. This method is called only once after instantiating the portlet. This method can be used to create expensive objects/resources used by the portlet.

processAction(ActionRequest request, ActionResponse response): to notify the portlet that the user has triggered an action on this portlet. Only one action per client request is triggered. In an action, a portlet can issue a redirect, change its portlet mode or window state, modify its persistent state, or set render parameters.

render(RenderRequest request, RenderResponse response): to generate the markup. For each portlet on the current page, the render method is called, and the portlet can produce markup that may depend on the portlet mode or window state, render parameters, request attributes, persistent state, session data, or backend data.

destroy(): to indicate to the portlet the life cycle's end. This method allows the portlet to free up resources and update any persistent data that belongs to this portlet.

Portlet modes

A portlet mode indicates the function a portlet performs. Usually, portlets execute different tasks and create different content depending on the functions they currently perform. A portlet mode advises the portlet what task it should perform and what content it should generate. When invoking a portlet, the portlet container provides the current portlet mode to the portlet. Portlets can programmatically change their mode when processing an action request. JSR 168 splits portlet modes into three categories: required modes, optional custom modes, and portal vendor-specific modes.

Window states

A window state indicates the amount of portal page space that will be assigned to the content generated by a portlet. When invoking a portlet, the portlet container provides the current window state to the portlet. The portlet may use the window state to decide how much information it should render. Portlets can programmatically change their window state when processing an action request. JSR 168 defines the following window states: Normal, Maximized, and Minimized.
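To see the order in which a container drives a portlet, here is a toy model in plain Java. It deliberately does not use the javax.portlet API, so it runs without a portal. MiniPortlet, CounterPortlet and MiniContainer are hypothetical stand-ins I made up, with maps and a StringBuilder in place of the real request and response objects.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for the portlet interface, using plain Java types.
interface MiniPortlet {
    void init(Map<String, String> config);
    void processAction(Map<String, String> request, Map<String, String> renderParams);
    void render(Map<String, String> renderParams, StringBuilder markup);
    void destroy();
}

class CounterPortlet implements MiniPortlet {
    final List<String> calls = new ArrayList<>(); // records the life cycle order
    private int clicks;

    public void init(Map<String, String> config) { calls.add("init"); }

    // An action may change state and set render parameters, but writes no markup.
    public void processAction(Map<String, String> request, Map<String, String> renderParams) {
        calls.add("processAction");
        clicks++;
        renderParams.put("clicks", String.valueOf(clicks));
    }

    // Render only produces markup from what it is given.
    public void render(Map<String, String> renderParams, StringBuilder markup) {
        calls.add("render");
        markup.append("<p>Clicks: ").append(renderParams.getOrDefault("clicks", "0")).append("</p>");
    }

    public void destroy() { calls.add("destroy"); }
}

class MiniContainer {
    // One client request: at most one action, then a render.
    static String handleRequest(MiniPortlet portlet, boolean actionTriggered) {
        Map<String, String> renderParams = new HashMap<>();
        if (actionTriggered) {
            portlet.processAction(new HashMap<>(), renderParams);
        }
        StringBuilder markup = new StringBuilder();
        portlet.render(renderParams, markup);
        return markup.toString();
    }

    public static void main(String[] args) {
        CounterPortlet portlet = new CounterPortlet();
        portlet.init(new HashMap<>());
        System.out.println(handleRequest(portlet, false));
        System.out.println(handleRequest(portlet, true));
        portlet.destroy();
        System.out.println(portlet.calls);
    }
}
```

In a real portlet you would normally extend GenericPortlet and let the portal supply the request, response, mode and window state.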

Posted by iCehaNgeR's hAcK NoteS at 4:29 PM 9 comments

Friday, June 8, 2007

Spring Hibernate FAQ

Q. Explain DI or IOC pattern.

A: Dependency injection (DI) is a programming design pattern and architectural model, sometimes also referred to as inversion of control or IOC, although technically speaking, dependency injection specifically refers to an implementation of a particular form of IOC. Dependency injection describes the situation where one object uses a second object to provide a particular capacity, for example being passed a database connection as an argument to the constructor instead of creating one internally. The term "dependency injection" is a misnomer, since it is not a dependency that is injected but a provider of some capability or resource. There are three common forms of dependency injection: setter-, constructor- and interface-based injection. Dependency injection is a way to achieve loose coupling.

Inversion of control (IOC) relates to the way in which an object obtains references to its dependencies. This is often done by a lookup method. The advantage of inversion of control is that it decouples objects from specific lookup mechanisms and implementations of the objects they depend on. As a result, more flexibility is obtained for production applications as well as for testing.

Q. What are the different IOC containers available?

A. Spring is an IOC container. Other IOC containers are HiveMind, Avalon, PicoContainer.

Q. What are the different types of dependency injection? Explain with examples.

A: The two types used most often are setter injection and constructor injection.

Setter injection: Normally in all the java beans, we will use setter and getter methods to set and get the value of a property as follows:

public class namebean {

String name;

public void setName(String a) {

name = a; }

public String getName() {

return name; }

}

We will create an instance of the bean 'namebean' (say bean1) and set the property as bean1.setName("tom"); With setter injection, we instead set the property 'name' in the Spring configuration file, using a <property> element inside the bean definition.

Constructor injection: For constructor injection, we use a constructor with parameters as shown below:

public class namebean {

String name;

public namebean(String a) {

name = a;

}

}

We will set the property 'name' while creating an instance of the bean 'namebean' as namebean bean1 = new namebean("tom");
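Setting a string is the simplest case. The same two styles are more interesting when the injected thing is a collaborator object. Below is a minimal sketch in plain Java, wired by hand with no Spring involved. All class names (Greeter, EnglishGreeter, ConstructorInjected, SetterInjected, ManualWiring) are made up for the example.

```java
// The capability that gets injected.
interface Greeter {
    String greet(String name);
}

class EnglishGreeter implements Greeter {
    public String greet(String name) { return "Hello, " + name; }
}

// Constructor injection: the collaborator is mandatory and fixed at creation.
class ConstructorInjected {
    private final Greeter greeter;

    ConstructorInjected(Greeter greeter) { this.greeter = greeter; }

    String welcome(String name) { return greeter.greet(name); }
}

// Setter injection: the collaborator is supplied after construction.
class SetterInjected {
    private Greeter greeter;

    void setGreeter(Greeter greeter) { this.greeter = greeter; }

    String welcome(String name) { return greeter.greet(name); }
}

class ManualWiring {
    public static void main(String[] args) {
        // This wiring is what a container would otherwise do from its configuration.
        Greeter greeter = new EnglishGreeter();

        ConstructorInjected a = new ConstructorInjected(greeter);
        SetterInjected b = new SetterInjected();
        b.setGreeter(greeter);

        System.out.println(a.welcome("tom"));
        System.out.println(b.welcome("tom"));
    }
}
```

Neither class knows which Greeter implementation it receives, so a test can pass in a fake one.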

In the Spring configuration file, constructor injection uses the <constructor-arg> element of the bean definition.

Q. What is Spring? What are the various parts of the Spring framework? What are the different persistence frameworks which could be used with Spring?

A. Spring is an open source framework created to address the complexity of enterprise application development. One of the chief advantages of the Spring framework is its layered architecture, which allows you to be selective about which of its components you use while also providing a cohesive framework for J2EE application development. The Spring modules are built on top of the core container, which defines how beans are created, configured, and managed. Each of the modules (or components) that comprise the Spring framework can stand on its own or be implemented jointly with one or more of the others. The functionality of each component is as follows:

The core container: The core container provides the essential functionality of the Spring framework. A primary component of the core container is the BeanFactory, an implementation of the Factory pattern. The BeanFactory applies the Inversion of Control (IOC) pattern to separate an application's configuration and dependency specification from the actual application code.

Spring context: The Spring context is a configuration file that provides context information to the Spring framework. The Spring context includes enterprise services such as JNDI, EJB, e-mail, internationalization, validation, and scheduling functionality.

Spring AOP: The Spring AOP module integrates aspect-oriented programming functionality directly into the Spring framework, through its configuration management feature. As a result you can easily AOP-enable any object managed by the Spring framework. The Spring AOP module provides transaction management services for objects in any Spring-based application. With Spring AOP you can incorporate declarative transaction management into your applications without relying on EJB components.

Spring DAO: The Spring JDBC DAO abstraction layer offers a meaningful exception hierarchy for managing the exception handling and error messages thrown by different database vendors. The exception hierarchy simplifies error handling and greatly reduces the amount of exception code you need to write, such as opening and closing connections. Spring DAO's JDBC-oriented exceptions comply with its generic DAO exception hierarchy.

Spring ORM: The Spring framework plugs into several ORM frameworks to provide its Object Relational tool, including JDO, Hibernate, and iBatis SQL Maps. All of these comply with Spring's generic transaction and DAO exception hierarchies.

Spring Web module: The Web context module builds on top of the application context module, providing contexts for Web-based applications. As a result, the Spring framework supports integration with Jakarta Struts. The Web module also eases the tasks of handling multi-part requests and binding request parameters to domain objects.

Spring MVC framework: The Model-View-Controller (MVC) framework is a full-featured MVC implementation for building Web applications. The MVC framework is highly configurable via strategy interfaces and accommodates numerous view technologies including JSP, Velocity, Tiles, iText, and POI.

Q. What is AOP? How does it relate to IOC? What are the different tools to utilize AOP?

A: Aspect-oriented programming, or AOP, is a programming technique that allows programmers to modularize crosscutting concerns, or behavior that cuts across the typical divisions of responsibility, such as logging and transaction management. The core construct of AOP is the aspect, which encapsulates behaviors affecting multiple classes into reusable modules. AOP and IOC are complementary technologies in that both apply a modular approach to complex problems in enterprise application development.

In a typical object-oriented development approach you might implement logging functionality by putting logger statements in all your methods and Java classes. In an AOP approach you would instead modularize the logging services and apply them declaratively to the components that required logging. The advantage, of course, is that the Java class doesn't need to know about the existence of the logging service or concern itself with any related code. As a result, application code written using Spring AOP is loosely coupled.

The best tool to utilize AOP to its full capability is AspectJ. However, AspectJ works at the bytecode level and you need to use the AspectJ compiler to get the AOP features built into your compiled code. Nevertheless, AOP functionality is fully integrated into the Spring context for transaction management, logging, and various other features. In general, any AOP framework controls aspects through three constructs:

Joinpoints: Points in a program's execution. For example, joinpoints could define calls to specific methods in a class

Pointcuts: Program constructs to designate joinpoints and collect specific context at those points

Advices: Code that runs upon meeting certain conditions. For example, an advice could log a message before executing a joinpoint
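The three constructs above can be tried out with nothing but the JDK. The sketch below uses java.lang.reflect.Proxy as a stand-in for a real AOP framework: every call to an interface method is a joinpoint, the "pointcut" is simply all methods of the interface, and the advice writes a log line before the call. AccountService, SimpleAccountService and LoggingAspectSketch are hypothetical names. This is not how you would configure Spring AOP or AspectJ.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

interface AccountService {
    int deposit(int amount);
}

// Business code: contains no logging statements at all.
class SimpleAccountService implements AccountService {
    private int balance;

    public int deposit(int amount) {
        balance += amount;
        return balance;
    }
}

class LoggingAspectSketch {

    // Wraps 'target' so that a log entry is recorded before every interface call.
    static <T> T withLogging(T target, Class<T> iface, List<String> log) {
        InvocationHandler handler = (proxy, method, args) -> {
            log.add("before " + method.getName()); // the advice
            try {
                return method.invoke(target, args); // proceed to the real method
            } catch (InvocationTargetException e) {
                throw e.getCause(); // rethrow what the target itself threw
            }
        };
        Object proxy = Proxy.newProxyInstance(
                iface.getClassLoader(), new Class<?>[] {iface}, handler);
        return iface.cast(proxy);
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        AccountService service =
                withLogging(new SimpleAccountService(), AccountService.class, log);
        System.out.println(service.deposit(50));
        System.out.println(service.deposit(25));
        System.out.println(log);
    }
}
```

The caller only sees AccountService, so the logging can be added or removed without touching SimpleAccountService.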

Q. What are the advantages of spring framework? A.

Q. Can you name a tool which could provide the initial ant files and directory structure for a new spring project?

A: Appfuse or equinox.

Q. Explain BeanFactory in spring.

A: A bean factory is an implementation of the factory design pattern and its function is to create and dispense beans. As the bean factory knows about many objects within an application, it is able to create associations between collaborating objects as they are instantiated. This removes the burden of configuration from the bean and the client. There are several implementations of BeanFactory. The most useful one is "org.springframework.beans.factory.xml.XmlBeanFactory". It loads its beans based on the definitions contained in an XML file. To create an XmlBeanFactory, pass an InputStream to the constructor. The stream will provide the XML to the factory.

BeanFactory factory = new XmlBeanFactory(new FileInputStream("myBean.xml"));

This line tells the bean factory to read the bean definitions from the XML file. The bean definitions include the description of the beans and their properties. But the bean factory doesn't instantiate the beans yet. To retrieve a bean from a 'BeanFactory', the getBean() method is called. When the getBean() method is called, the factory will instantiate the bean and begin setting the bean's properties using dependency injection.

myBean bean1 = (myBean)factory.getBean("myBean");

Q. Explain the role of ApplicationContext in spring.

A. While the BeanFactory is used for simple applications, the Application Context is Spring's more advanced container. Like 'BeanFactory' it can be used to load bean definitions, wire beans together and dispense beans upon request. It also provides:

1) a means for resolving text messages, including support for internationalization.

2) a generic way to load file resources.

3) events to beans that are registered as listeners.

Because of this additional functionality, 'Application Context' is preferred over a BeanFactory. Only when resources are scarce, as on mobile devices, is 'BeanFactory' used. The three commonly used implementations of 'Application Context' are:

1. ClassPathXmlApplicationContext: It loads the context definition from an XML file located in the classpath, treating context definitions as classpath resources. The application context is loaded from the application's classpath by using the code

ApplicationContext context = new ClassPathXmlApplicationContext("bean.xml");

2. FileSystemXmlApplicationContext : It loads context definition from an XML file in the filesystem. The application context is loaded from the file system by using the code

ApplicationContext context = new FileSystemXmlApplicationContext("bean.xml");

3. XmlWebApplicationContext : It loads context definition from an XML file contained within a web application.
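The "define first, instantiate on getBean()" behaviour described for the BeanFactory is easy to imitate. The following toy factory is only a sketch in plain Java and has nothing to do with Spring's real implementation. ToyBeanFactory and its methods are invented names, and bean definitions are lambdas instead of XML.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

class ToyBeanFactory {
    // name -> recipe; a recipe may ask the factory for other beans (wiring)
    private final Map<String, Function<ToyBeanFactory, Object>> definitions = new HashMap<>();
    private final Map<String, Object> singletons = new HashMap<>();

    // Registers a definition; nothing is instantiated here.
    void define(String name, Function<ToyBeanFactory, Object> recipe) {
        definitions.put(name, recipe);
    }

    // Creates the bean on first request and hands out the same instance afterwards.
    Object getBean(String name) {
        Object bean = singletons.get(name);
        if (bean == null) {
            Function<ToyBeanFactory, Object> recipe = definitions.get(name);
            if (recipe == null) {
                throw new IllegalArgumentException("No bean named " + name);
            }
            bean = recipe.apply(this);
            singletons.put(name, bean);
        }
        return bean;
    }

    int instantiatedCount() {
        return singletons.size();
    }

    public static void main(String[] args) {
        ToyBeanFactory factory = new ToyBeanFactory();
        factory.define("name", f -> "tom");
        factory.define("greeting", f -> "Hello, " + f.getBean("name"));

        System.out.println(factory.instantiatedCount()); // nothing created yet
        System.out.println(factory.getBean("greeting")); // creates both beans
        System.out.println(factory.instantiatedCount());
    }
}
```

Asking for "greeting" pulls in "name" as well, which is the small-scale version of a factory creating associations between collaborating objects.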

Q. How does Spring support DAOs in Hibernate?

A. Spring's HibernateDaoSupport class is a convenient super class for Hibernate DAOs. It has handy methods you can call to get a Hibernate Session, or a SessionFactory. The most convenient method is getHibernateTemplate(), which returns a HibernateTemplate. This template wraps Hibernate checked exceptions with runtime exceptions, allowing your DAO interfaces to be Hibernate exception-free. Example:

public class UserDAOHibernate extends HibernateDaoSupport {

public User getUser(Long id) {

return (User) getHibernateTemplate().get(User.class, id);

}

public void saveUser(User user) {

getHibernateTemplate().saveOrUpdate(user);

if (log.isDebugEnabled()) {

log.debug("userId set to: " + user.getID());

}

}

public void removeUser(Long id) {

Object user = getHibernateTemplate().load(User.class, id);

getHibernateTemplate().delete(user);

}

}

Q. What are the id generator classes in hibernate?

A: increment: It generates identifiers of type long, short or int that are unique only when no other process is inserting data into the same table. It should not be used in a clustered environment.

identity: It supports identity columns in DB2, MySQL, MS SQL Server, Sybase and HypersonicSQL. The returned identifier is of type long, short or int.

sequence: The sequence generator uses a sequence in DB2, PostgreSQL, Oracle, SAP DB, McKoi or a generator in Interbase. The returned identifier is of type long, short or int

hilo: The hilo generator uses a hi/lo algorithm to efficiently generate identifiers of type long, short or int, given a table and column (by default hibernate_unique_key and next_hi respectively) as a source of hi values. The hi/lo algorithm generates identifiers that are unique only for a particular database. Do not use this generator with connections enlisted with JTA or with a user-supplied connection.

seqhilo: The seqhilo generator uses a hi/lo algorithm to efficiently generate identifiers of type long, short or int, given a named database sequence.

uuid: The uuid generator uses a 128-bit UUID algorithm to generate identifiers of type string, unique within a network (the IP address is used). The UUID is encoded as a string of hexadecimal digits of length 32.

guid: It uses a database-generated GUID string on MS SQL Server and MySQL.

native: It picks identity, sequence or hilo depending upon the capabilities of the underlying database.

assigned: lets the application assign an identifier to the object before save() is called. This is the default strategy if no <generator> element is specified.

1. An interface that defines the functions.

2. An implementation that contains properties, their setter and getter methods, functions etc.

3. An XML file called the Spring configuration file.

4. A client program that uses the functions.
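Going back to the hilo generator in the id generator list: the idea is that one trip to the database reserves a whole block of identifiers. Here is a simplified sketch of the arithmetic in plain Java. It is not Hibernate's exact code. HiLoGeneratorSketch is a made-up class and the LongSupplier stands in for reading and incrementing next_hi in the table.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.LongSupplier;

class HiLoGeneratorSketch {
    private final LongSupplier nextHi; // stands in for the database round trip
    private final int maxLo;           // block size is maxLo + 1
    private long hi;
    private int lo;

    HiLoGeneratorSketch(LongSupplier nextHi, int maxLo) {
        this.nextHi = nextHi;
        this.maxLo = maxLo;
        this.lo = maxLo + 1; // forces a fetch of 'hi' on first use
    }

    synchronized long next() {
        if (lo > maxLo) {
            hi = nextHi.getAsLong(); // one "database hit" per block
            lo = 0;
        }
        return hi * (maxLo + 1) + lo++;
    }

    public static void main(String[] args) {
        AtomicLong table = new AtomicLong(); // pretend next_hi column
        HiLoGeneratorSketch generator =
                new HiLoGeneratorSketch(table::getAndIncrement, 2);
        for (int i = 0; i < 7; i++) {
            System.out.print(generator.next() + " ");
        }
        System.out.println();
        System.out.println("hi values fetched: " + table.get());
    }
}
```

With maxLo = 2 the seven ids cost only three fetches of the hi value, which is the whole point of the algorithm.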

Q. How do you define hibernate mapping file in spring?

A. Add the hibernate mapping file entry under the mappingResources property inside Spring's applicationContext.xml file in the web/WEB-INF directory.

Q. How do you configure spring in a web application?

A. It is very easy to configure any J2EE-based web application to use Spring. At the very least, you can simply add Spring's ContextLoaderListener to your web.xml file in a <listener> element.

Q. Can you have xyz.xml file instead of applicationcontext.xml?

A. ContextLoaderListener is a ServletContextListener that initializes when your webapp starts up. By default, it looks for Spring's configuration file at WEB-INF/applicationContext.xml. You can change this default value by specifying a <context-param> element named contextConfigLocation.

Q. How do you configure your database driver in spring?

A. Using the datasource "org.springframework.jdbc.datasource.DriverManagerDataSource".

Q. How can you configure JNDI instead of datasource in spring applicationcontext.xml?

A. Using "org.springframework.jndi.JndiObjectFactoryBean".

Q. What are the key benefits of Hibernate?

A.

Q. What is hibernate session and session factory? How do you configure sessionfactory in spring configuration file?

A. Hibernate Session is the main runtime interface between a Java application and Hibernate. SessionFactory allows applications to create Hibernate sessions by reading the Hibernate configuration file hibernate.cfg.xml.

// Initialize the Hibernate environment

Configuration cfg = new Configuration().configure();

// Create the session factory

SessionFactory factory = cfg.buildSessionFactory();

// Obtain the new session object

Session session = factory.openSession();

The call to Configuration().configure() loads the hibernate.cfg.xml configuration file and initializes the Hibernate environment. Once the configuration is initialized, you can make any additional modifications you desire programmatically. However, you must make these modifications prior to creating the SessionFactory instance. An instance of SessionFactory is typically created once and used to create all sessions related to a given context.

The main function of the Session is to offer create, read and delete operations for instances of mapped entity classes. Instances may exist in one of three states:

transient: never persistent, not associated with any Session

persistent: associated with a unique Session

detached: previously persistent, not associated with any Session

A Hibernate Session object represents a single unit-of-work for a given data store and is opened by a SessionFactory instance. You must close Sessions when all work for a transaction is completed. The following illustrates a typical Hibernate session: Session session = null; UserInfo user = null; Transaction tx = null; try { session = factory.openSession(); tx = session.beginTransaction(); user = (UserInfo)session.load(UserInfo.class, id); tx.commit(); } catch(Exception e) { if (tx != null) { try { tx.rollback(); } catch (HibernateException e1) { throw new DAOException(e1.toString()); } } throw new DAOException(e.toString()); } finally { if (session != null) { try { session.close(); } catch (HibernateException e) { } } } Q. What is the difference between hibernate get and load methods? A. The load() method is older; get() was added to Hibernate’s API due to user request. The difference is trivial: The following Hibernate code snippet retrieves a User object from the database: User user = (User) session.get(User.class, userID); The get() method is special because the identifier uniquely identifies a single instance of a class. Hence it’s common for applications to use the identifier as a convenient handle to a persistent object. Retrieval by identifier can use the cache when retrieving an object, avoiding a database hit if the object is already cached. Hibernate also provides a load() method: User user = (User) session.load(User.class, userID); If load() can’t find the object in the cache or database, an exception is thrown. The load() method never returns null. The get() method returns null if the object can’t be found. The load() method may return a proxy instead of a real persistent instance. A proxy is a placeholder instance of a runtime-generated subclass (through cglib or Javassist) of a mapped persistent class, it can initialize itself if any method is called that is not the mapped database identifier getter-method. 
On the other hand, get() never returns a proxy. Choosing between get() and load() is easy: If you’re certain the persistent object exists, and nonexistence would be considered exceptional, load() is a good option. If you aren’t certain there is a persistent instance with the given identifier, use get() and test the return value to see if it’s null. Using load() has a further implication: The application may retrieve a valid reference (a proxy) to a persistent instance without hitting the database to retrieve its persistent state. So load() might not throw an exception when it doesn’t find the persistent object in the cache or database; the exception would be thrown later, when the proxy is accessed. Q. What type of transaction management is supported in hibernate? A. Hibernate communicates with the database via a JDBC Connection; hence it must support both managed and non-managed transactions. non-managed in web containers: managed in application server using JTA: Q. What is lazy loading and how do you achieve that in hibernate? A. Lazy setting decides whether to load child objects while loading the Parent Object. You need to specify parent class.Lazy = true in hibernate mapping file. By default the lazy loading of the child objects is true. This make sure that the child objects are not loaded unless they are explicitly invoked in the application by calling getChild() method on parent. In this case hibernate issues a fresh database call to load the child when getChild() is actully called on the Parent object. But in some cases you do need to load the child objects when parent is loaded. Just make the lazy=false and hibernate will load the child when parent is loaded from the database. Examples: Address child of User class can be made lazy if it is not required frequently. But you may need to load the Author object for Book parent whenever you deal with the book for online bookshop. Hibernate does not support lazy initialization for detached objects. 
Access to a lazy association outside of the context of an open Hibernate session will result in an exception.

Q. What are the different fetching strategies in Hibernate?

A. Hibernate3 defines the following fetching strategies:

Join fetching - Hibernate retrieves the associated instance or collection in the same SELECT, using an OUTER JOIN.

Select fetching - a second SELECT is used to retrieve the associated entity or collection. Unless you explicitly disable lazy fetching by specifying lazy="false", this second select will only be executed when you actually access the association.

Subselect fetching - a second SELECT is used to retrieve the associated collections for all entities retrieved in a previous query or fetch. Unless you explicitly disable lazy fetching by specifying lazy="false", this second select will only be executed when you actually access the association.

Batch fetching - an optimization strategy for select fetching - Hibernate retrieves a batch of entity instances or collections in a single SELECT, by specifying a list of primary keys or foreign keys.

For more details read short primer on fetching strategy at http://www.hibernate.org/315.html
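As a rough illustration of the lazy select fetching described above, here is a plain-Java sketch that does not use any Hibernate classes. The names LazyFetchSketch, Lazy, User and loadUser are hypothetical, and the counter only simulates SELECT statements; the point is that the second "select" runs on first access to the association, not when the parent is loaded.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Supplier;

/** Plain-Java sketch of a lazily fetched association; no Hibernate classes are used. */
class LazyFetchSketch {

    /** Counts simulated SELECT statements so the effect of laziness is visible. */
    static int selectCount = 0;

    /** Stand-in for a lazy proxy: runs its loader on first access only. */
    static final class Lazy<T> {
        private final Supplier<T> loader;
        private T value;
        private boolean loaded;

        Lazy(Supplier<T> loader) {
            this.loader = loader;
        }

        T get() {
            if (!loaded) {
                value = loader.get();   // the simulated second SELECT runs here
                loaded = true;
            }
            return value;
        }
    }

    /** Hypothetical parent entity with a lazily loaded child collection. */
    static final class User {
        final String name;
        final Lazy<List<String>> addresses;

        User(String name, Lazy<List<String>> addresses) {
            this.name = name;
            this.addresses = addresses;
        }
    }

    /** Simulates loading the parent row: one SELECT, children left untouched. */
    static User loadUser(String name) {
        selectCount++;
        return new User(name, new Lazy<>(() -> {
            selectCount++;              // simulated SELECT for the association
            return Arrays.asList("12 Main St", "34 Side Ave");
        }));
    }

    public static void main(String[] args) {
        User user = loadUser("alice");
        System.out.println("SELECTs after loading parent: " + selectCount);
        System.out.println("Addresses: " + user.addresses.get());
        System.out.println("SELECTs after first access: " + selectCount);
        user.addresses.get();
        System.out.println("SELECTs after second access: " + selectCount);
    }
}
```

In real Hibernate the proxy also needs an open Session to run that second SELECT, which is why touching a lazy association on a detached object fails.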

Q. What are different types of cache hibernate supports?

A. Caching is widely used for optimizing database applications. Hibernate uses two different caches for objects: first-level cache and second-level cache. The first-level cache is associated with the Session object, while the second-level cache is associated with the SessionFactory object.

By default, Hibernate uses the first-level cache on a per-transaction basis. Hibernate uses this cache mainly to reduce the number of SQL queries it needs to generate within a given transaction. For example, if an object is modified several times within the same transaction, Hibernate will generate only one SQL UPDATE statement at the end of the transaction, containing all the modifications.

To reduce database traffic, the second-level cache keeps loaded objects at the SessionFactory level between transactions. These objects are available to the whole application, not just to the user running the query. This way, each time a query returns an object that is already loaded in the cache, one or more database transactions are potentially avoided.

In addition, you can use a query-level cache if you need to cache actual query results, rather than just persistent objects. The query cache should always be used in conjunction with the second-level cache.

Hibernate supports the following open-source cache implementations out-of-the-box:

Q. What are the different caching strategies?

A. The following four caching strategies are available:

Q. How do you configure 2nd level cache in hibernate?

A. To activate second-level caching, you need to define the hibernate.cache.provider_class property in the hibernate.cfg.xml file as follows:

Q. What is the difference between sorted and ordered collection in hibernate?

A. A sorted collection is sorted in memory using a Java Comparator, while an ordered collection is ordered at the database level using an ORDER BY clause.

Example: Let us take the simple example of 3 Java classes. The Manager and Worker classes inherit from the abstract Employee class.

1. Table per concrete class with unions: In this case there will be 2 tables. Tables: Manager, Worker [all common attributes will be duplicated]

2. Table per class hierarchy: A single table can be mapped to a class hierarchy. There will be only one table in the database, called 'Employee', that holds all the attributes required for all 3 classes. It needs a discriminator column to differentiate between Manager and Worker.

3. Table per subclass: In this case there will be 3 tables representing Employee, Manager and Worker.
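To make the table-per-class-hierarchy idea a little more concrete, here is a plain-Java sketch of the Employee, Manager and Worker classes and of how a discriminator value could pick the subclass for one row of the single 'Employee' table. This is not Hibernate mapping code: the column names EMPLOYEE_TYPE and NAME, the discriminator values, and the fromRow helper are all assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java sketch of the Employee hierarchy and a single-table row mapping. */
class InheritanceMappingSketch {

    abstract static class Employee {
        final String name;

        Employee(String name) {
            this.name = name;
        }
    }

    static final class Manager extends Employee {
        Manager(String name) {
            super(name);
        }
    }

    static final class Worker extends Employee {
        Worker(String name) {
            super(name);
        }
    }

    /**
     * Turns one row of the single 'Employee' table into the matching subclass.
     * The column names EMPLOYEE_TYPE and NAME are hypothetical.
     */
    static Employee fromRow(Map<String, String> row) {
        String type = row.get("EMPLOYEE_TYPE");   // discriminator column
        String name = row.get("NAME");
        if ("MANAGER".equals(type)) {
            return new Manager(name);
        }
        if ("WORKER".equals(type)) {
            return new Worker(name);
        }
        throw new IllegalArgumentException("Unknown discriminator: " + type);
    }

    public static void main(String[] args) {
        Map<String, String> row = new HashMap<>();
        row.put("EMPLOYEE_TYPE", "MANAGER");
        row.put("NAME", "Asha");
        Employee e = fromRow(row);
        System.out.println(e.getClass().getSimpleName() + ": " + e.name);
    }
}
```

With the other two strategies no discriminator is needed, because the table a row comes from already identifies the class.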

Posted by iCehaNgeR's hAcK NoteS at 2:19 PM 4 comments

Thursday, June 7, 2007

Versions

JDK 1.4 and 1.5, Struts 1.3, Hibernate 3.1.3, Spring 1.2.8, Apache Tomcat, Eclipse, RAD, WAS

Posted by iCehaNgeR's hAcK NoteS at 8:40 PM 0 comments

UML diagrams

http://www.holub.com/goodies/uml/

Posted by iCehaNgeR's hAcK NoteS at 8:39 PM 0 comments

Webservices

1. How to implement Webservice. steps.

Posted by iCehaNgeR's hAcK NoteS at 8:39 PM 0 comments

Patterns

1.Different types of patterns.

Posted by iCehaNgeR's hAcK NoteS at 8:38 PM 0 comments

JMS.

1.How to do lookup in JMS.

JMS API Architecture

A JMS application is composed of the following parts.

Pub/sub messaging has the following characteristics.

- Each message can have multiple consumers.

- Publishers and subscribers have a timing dependency. A client that subscribes to a topic can consume only messages published after the client has created a subscription, and the subscriber must continue to be active in order for it to consume messages.
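The two pub/sub characteristics above can be sketched with a small in-memory topic. This is only an illustration in plain Java, not the JMS API; TopicSketch and Subscriber are hypothetical names. Each published message goes to every active subscriber, and a subscriber sees only messages published after it subscribed.

```java
import java.util.ArrayList;
import java.util.List;

/** In-memory sketch of pub/sub semantics; it does not use the JMS API. */
class TopicSketch {

    /** Hypothetical subscriber that records what it receives. */
    static final class Subscriber {
        final List<String> received = new ArrayList<>();

        void onMessage(String text) {
            received.add(text);
        }
    }

    private final List<Subscriber> subscribers = new ArrayList<>();

    /** Only messages published after this call reach the subscriber. */
    void subscribe(Subscriber s) {
        subscribers.add(s);
    }

    /** A subscriber that is no longer active stops consuming messages. */
    void unsubscribe(Subscriber s) {
        subscribers.remove(s);
    }

    /** Delivers one message to every currently active subscriber. */
    void publish(String text) {
        for (Subscriber s : subscribers) {
            s.onMessage(text);
        }
    }

    public static void main(String[] args) {
        TopicSketch topic = new TopicSketch();
        Subscriber early = new Subscriber();
        Subscriber late = new Subscriber();

        topic.subscribe(early);
        topic.publish("first");      // only 'early' is subscribed at this point
        topic.subscribe(late);
        topic.publish("second");     // both subscribers receive this one

        System.out.println("early: " + early.received);
        System.out.println("late:  " + late.received);
    }
}
```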

// Fragment: assumes jndiContext, queueConnectionFactory, queue,
// queueConnection (initially null), queueSession, queueSender,
// message and NUM_MSGS are declared earlier in the class.

/*
 * Look up connection factory and queue. If either does
 * not exist, exit.
 */
try {
    queueConnectionFactory = (QueueConnectionFactory)
        jndiContext.lookup("java:comp/env/jms/MyQueueConnectionFactory");
    queue = (Queue)
        jndiContext.lookup("java:comp/env/jms/QueueName");
} catch (NamingException e) {
    System.out.println("JNDI lookup failed: " + e.toString());
    System.exit(1);
}

/*
 * Create connection.
 * Create session from connection; false means session is
 * not transacted.
 * Create sender and text message.
 * Send messages, varying text slightly.
 * Finally, close connection.
 */
try {
    queueConnection =
        queueConnectionFactory.createQueueConnection();
    queueSession =
        queueConnection.createQueueSession(false,
            Session.AUTO_ACKNOWLEDGE);
    queueSender = queueSession.createSender(queue);
    message = queueSession.createTextMessage();
    for (int i = 0; i < NUM_MSGS; i++) {
        message.setText("This is message " + (i + 1));
        System.out.println("Sending message: " + message.getText());
        queueSender.send(message);
    }
} catch (JMSException e) {
    System.out.println("Exception occurred: " + e.toString());
} finally {
    // Close the connection, as the comment above describes
    if (queueConnection != null) {
        try {
            queueConnection.close();
        } catch (JMSException e) {
            // ignore failures while closing
        }
    }
}

Receiving Message Using MDB

---------------------------

- public interface TextMessage extends Message

A TextMessage object is used to send a message containing a java.lang.String. It inherits from the Message interface and adds a text message body.

Implement the message-driven bean as a class that implements MessageDrivenBean and MessageListener:

/**
 * onMessage method, declared as public (but not final or
 * static), with a return type of void, and with one argument
 * of type javax.jms.Message.
 *
 * Casts the incoming Message to a TextMessage and displays
 * the text.
 *
 * @param inMessage the incoming message
 */
public void onMessage(Message inMessage) {
    TextMessage msg = null;
    try {
        if (inMessage instanceof TextMessage) {
            msg = (TextMessage) inMessage;
            System.out.println("MESSAGE BEAN: Message received: " + msg.getText());
        } else {
            System.out.println("Message of wrong type: " + inMessage.getClass().getName());
        }
    } catch (JMSException e) {
        System.err.println("MessageBean.onMessage: JMSException: " + e.toString());
        // mdc is assumed to be the bean's MessageDrivenContext field, declared elsewhere
        mdc.setRollbackOnly();
    } catch (Throwable te) {
        System.err.println("MessageBean.onMessage: Exception: " + te.toString());
    }
}

Posted by iCehaNgeR's hAcK NoteS at 8:38 PM 0 comments

JDBC

1. How to do JDBC connection

2. Prepared statement

Posted by iCehaNgeR's hAcK NoteS at 8:37 PM 0 comments

EJB

BMP and CMP

1. Methods in EJB

2. EJB life cycle

Stateless session bean life cycle

The life cycle describes when a bean is created and removed by the EJB Container, and when the Container calls the bean's methods. The following figure illustrates the life cycle of a Stateless session bean.

You can control the life cycle by specifying the instance pool size in the vendor-specific deployment descriptor. For example, WebLogic Server's weblogic-ejb-jar.xml has an element for instance pool size

and JBoss server's jboss.xml has an element

When the server starts up, the EJB Container/Server creates 50 beans (the initial pool size assumed in this example) using the Class.newInstance() method, puts them in the pool, and calls the following callback methods of each bean.

setSessionContext(ctx) and

ejbCreate() methods

These 50 beans are ready to be accessed by 50 clients concurrently. If concurrent client access exceeds 50, the Container creates more beans and calls the above methods until the number of beans reaches 100 (the maximum number of beans in the pool). So at any time the Container can have at most 100 beans to serve clients. What happens if there are more than 100 concurrent clients? The Container puts the additional clients in a queue. The next section discusses how you can tune the pool size.

The Container removes beans from the pool when fewer clients are accessing them. Which beans it removes depends on its own algorithm (perhaps LRU, Least Recently Used). At that time the Container calls the ejbRemove() method of the bean.

If the client calls the home.create() method, the Container creates an EJBObject and assigns an existing bean from the pool to the client. At this time the client neither creates a bean nor triggers the ejbCreate() method, because ejbCreate() was already called when the bean was created and put in the pool. In the same manner, if the client calls the home.remove() / remote.remove() method, the Container removes the EJBObject, deassigns the bean from the client and puts it back in the pool; it does not remove the bean from the pool. In the Stateless session bean life cycle, the client has no control over the bean's life cycle or its callback methods, and it does not know when creation, destruction and callbacks occur. So for Stateless session beans, creation, destruction and callbacks depend on the pool size, concurrent client access and the Container's algorithms.

As the name implies, a Stateless session bean does not maintain any state (instance variable values) across methods. That is why the ejbActivate() and ejbPassivate() methods have no significance for a Stateless session bean, and why the Container can assign different beans from the pool to the client for successive method calls.
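The pool behaviour described above can be sketched in plain Java. This is a simplified model, not the EJB API and not how any particular container is implemented; StatelessPoolSketch, Bean, acquire and release are hypothetical names, and the pool sizes in main are kept small instead of 50 and 100. It shows that the callbacks run when the container creates an instance, not when a client obtains one.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Plain-Java sketch of a stateless bean instance pool; not the EJB API. */
class StatelessPoolSketch {

    /** Hypothetical bean that counts its ejbCreate() callbacks. */
    static final class Bean {
        static int ejbCreateCalls = 0;

        void setSessionContext() {
            // a real bean would store the SessionContext here
        }

        void ejbCreate() {
            ejbCreateCalls++;
        }
    }

    private final Deque<Bean> free = new ArrayDeque<>();
    private final int maxBeans;
    private int totalBeans = 0;

    StatelessPoolSketch(int initialBeans, int maxBeans) {
        this.maxBeans = maxBeans;
        for (int i = 0; i < initialBeans; i++) {
            free.push(newBean());      // the pool is filled at startup
        }
    }

    /** Container-side creation: the callbacks run once per instance. */
    private Bean newBean() {
        Bean bean = new Bean();
        bean.setSessionContext();
        bean.ejbCreate();
        totalBeans++;
        return bean;
    }

    /** Hands out a pooled bean; grows the pool until maxBeans is reached. */
    Bean acquire() {
        if (!free.isEmpty()) {
            return free.pop();         // no ejbCreate() call on this path
        }
        if (totalBeans < maxBeans) {
            return newBean();
        }
        return null;                   // a real container would queue the client
    }

    /** Puts the bean back in the pool instead of destroying it. */
    void release(Bean bean) {
        free.push(bean);
    }

    int totalBeans() {
        return totalBeans;
    }

    public static void main(String[] args) {
        StatelessPoolSketch pool = new StatelessPoolSketch(2, 3);
        Bean a = pool.acquire();
        Bean b = pool.acquire();
        Bean c = pool.acquire();       // pool grows from 2 to 3 here
        System.out.println("beans created: " + pool.totalBeans());
        System.out.println("fourth client served: " + (pool.acquire() != null));
        pool.release(a);
        System.out.println("after release served: " + (pool.acquire() != null));
    }
}
```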

With this discussion, we understand the importance of pool size and when the call back methods are executed in Stateless session beans. Now let us discuss how we can tune Stateless session beans.

Tune Stateless session beans instance pool size

The creation and destruction of beans is expensive. To reduce this cost, the EJB Container/Server creates a pool of beans whose size depends on vendor-specific configuration; you need to give a proper value for this pool size to increase performance. As discussed above, you configure the pool size in the deployment descriptor; for example, WebLogic's weblogic-ejb-jar.xml has an element

The maximum number of beans in the pool impacts performance. If it is too small, the Container has to put clients in the queue when the number of clients accessing the beans exceeds the maximum pool size. This degrades performance, and clients take more time to execute. For best performance, set the maximum number of beans equal to the maximum number of concurrent client accesses.
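The queueing effect of a too-small maximum can be modelled with a counting semaphore. This is only a sketch under the assumption that each pooled bean corresponds to one permit; PoolQueueSketch and its methods are hypothetical names, and real containers have their own queueing and timeout policies.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

/** Sketch of clients waiting when every pooled bean is in use; not the EJB API. */
class PoolQueueSketch {

    private final Semaphore permits;

    PoolQueueSketch(int maxBeansInPool) {
        // fair = true: waiting clients are served in arrival order
        this.permits = new Semaphore(maxBeansInPool, true);
    }

    /** Waits up to timeoutMillis for a free bean; false means the wait timed out. */
    boolean acquire(long timeoutMillis) {
        try {
            return permits.tryAcquire(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();   // preserve the interrupt for callers
            return false;
        }
    }

    /** Returns a bean to the pool, letting one waiting client proceed. */
    void release() {
        permits.release();
    }

    public static void main(String[] args) {
        PoolQueueSketch pool = new PoolQueueSketch(2);
        System.out.println("client 1 served: " + pool.acquire(10));
        System.out.println("client 2 served: " + pool.acquire(10));
        System.out.println("client 3 served: " + pool.acquire(10)); // pool is exhausted
        pool.release();
        System.out.println("client 3 retry served: " + pool.acquire(10));
    }
}
```

The time a client spends blocked in acquire is the extra latency the paragraph above refers to; raising the maximum toward the peak number of concurrent clients removes that wait at the cost of more instances in memory.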

Stateful session bean life cycle

The life cycles of Stateful and Stateless session beans differ. The reason is that a Stateful session bean has to maintain state (instance variable values) across methods for a client. That means once a client assigns values to instance variables using one method, those values are also available to other methods. The following figure illustrates the life cycle of a Stateful session bean.

Figure7: Stateful session bean life cycle

Here you see the instance cache instead of the instance pool. The cache maintains beans that have state (Stateful), whereas the pool maintains beans that don't have state (Stateless). You can control the life cycle by specifying the instance cache size in the vendor-specific deployment descriptor file. For example, WebLogic's weblogic-ejb-jar.xml has an element for instance cache size

and JBoss server's jboss.xml has an element

For detailed information, look at their documentation; for other servers, look at the vendor's documentation for instance cache configuration.
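The difference between an instance cache and an instance pool can be sketched in plain Java. This is an illustration only, not the EJB API: StatefulCacheSketch, CartBean and beanFor are hypothetical names, and the sketch ignores cache size limits. Each client is tied to its own bean, so values set in one call are still there in the next.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java sketch of a per-client instance cache; not the EJB API. */
class StatefulCacheSketch {

    /** Hypothetical stateful bean: its instance variables belong to one client. */
    static final class CartBean {
        private final List<String> items = new ArrayList<>();

        void addItem(String item) {
            items.add(item);
        }

        List<String> getItems() {
            return items;
        }
    }

    // One cached bean per client, keyed by a hypothetical client id
    private final Map<String, CartBean> cache = new HashMap<>();

    /** Returns the same bean for the same client on every call. */
    CartBean beanFor(String clientId) {
        return cache.computeIfAbsent(clientId, id -> new CartBean());
    }

    public static void main(String[] args) {
        StatefulCacheSketch container = new StatefulCacheSketch();
        container.beanFor("client-a").addItem("book");
        container.beanFor("client-a").addItem("pen");
        System.out.println("client-a: " + container.beanFor("client-a").getItems());
        System.out.println("client-b: " + container.beanFor("client-b").getItems());
    }
}
```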